Like all predictive coding tools, eDiscovery AI needs to be trained. Without providing instructions to the machine, how could it know what you are looking for?

The only difference is that training this AI is easy. Really easy.

Instead of having to provide thousands of positive and negative examples for training, you just have to tell the AI what you want. In normal, natural language. Imagine you are talking to a real human document reviewer. How would you instruct them?

"Find all documents where any of our executives are discussing how to price widgets."

"Identify any documents where a discussion took place about the company's retirement plans"

"We are looking for any documents where an employee of Acme said something inappropriate to John Smith."

"Can you find any documents where someone makes a statement properly suggesting we should discriminate against people who support the Green Bay Packers."

Easy enough, right? It is pretty simple. But as attorneys you know that isn’t good enough. What if we don’t really understand what we are looking for? What if internally they call them “fidgets” instead of “widgets?” You could end up having them looking for the wrong thing or missing things altogether.

This isn’t a new problem. We do this with document review. You can’t just walk into the first day of a new document review training without doing any research or understanding your data. You spend days meticulously preparing your reviewer handbook.

That’s all we have to do. But it’s way easier now because we can use AI to guide us.

So what we are really doing is training the instructor. We need them to understand their data. What if Product X was previously referred to as Product T until its owner changed his mind at the last minute? Did you know it was previously called Product T? We have to find out what we are looking for, and what we are not looking for. Today, we are going to show you how to do that.

Watch the video, or read the story below.

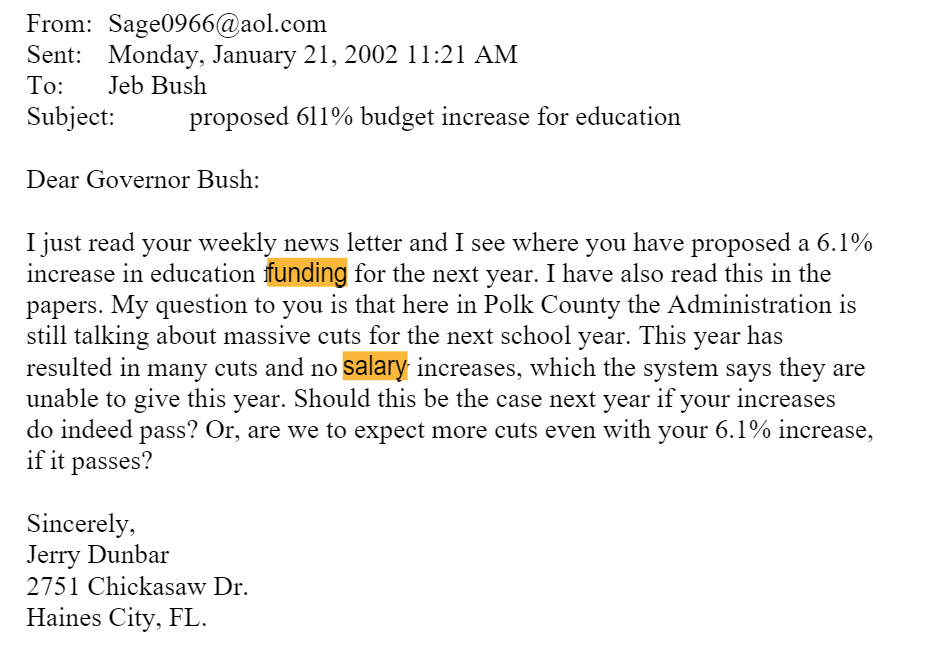

Background: For this hypothetical we are doing a review of 67,902 documents in the Jeb Bush dataset, which is publicly available from the time he was governor of Florida. We are looking for documents that discuss funding schools in Florida. Also, just for fun, we are going to see how many people in Florida write in to Jeb to encourage more school funding vs. the number of people who write to suggest less school funding. To avoid having to review documents, we are using the TREC answer key as a guide. In real life, you’re going to have to review some docs.

Let’s get started!

Round 1 Instructions

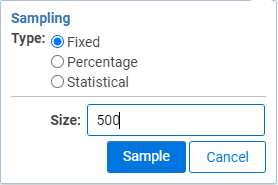

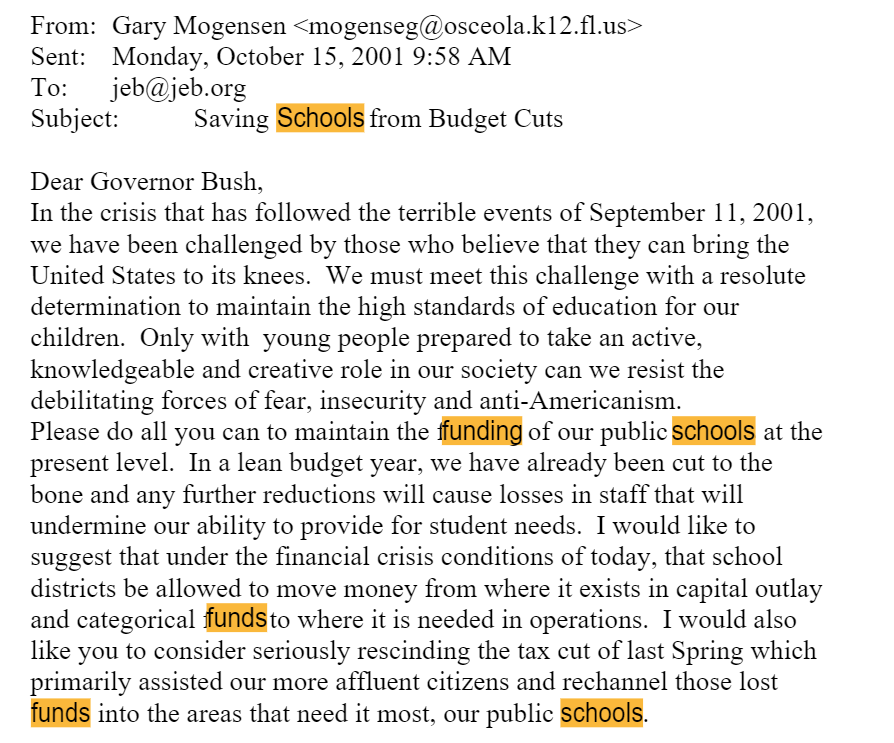

The first step is to identify the documents you need to review. From that set, we are going to take a random sample of documents. We selected 500.
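As a rough illustration of the sampling step, here is a minimal Python sketch that draws a simple random sample without replacement. The function name `draw_review_sample`, the `DOC-` ID format, and the seed are assumptions made for this example; they are not part of eDiscovery AI or any review platform.

```python
import random


def draw_review_sample(doc_ids, sample_size=500, seed=None):
    """Return a simple random sample of document IDs, without replacement."""
    doc_ids = list(doc_ids)
    if sample_size > len(doc_ids):
        raise ValueError("sample_size cannot exceed the number of documents")
    # A seeded generator makes the sample reproducible if it must be re-drawn.
    rng = random.Random(seed)
    return rng.sample(doc_ids, sample_size)


# Hypothetical IDs standing in for the 67,902 documents in the review set.
all_doc_ids = [f"DOC-{n:05d}" for n in range(1, 67_903)]
round_1_sample = draw_review_sample(all_doc_ids, sample_size=500, seed=1)
print(len(round_1_sample))
```

Fixing the seed is a design choice for this sketch: it lets you reproduce exactly which documents were in a given round.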

Then we run the documents through eDiscovery AI.

This is when we are presented with the opportunity to enter our instructions. Let’s come up with something pretty basic, but very broad:

"All documents with any mention of education funding or money going to schools"

"All documents that suggest increases in school funding."

"All documents that suggest decreases in school funding."

We run these documents and look at the results…

Round 1 Results

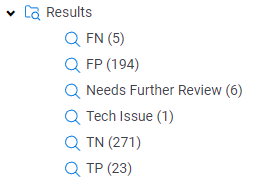

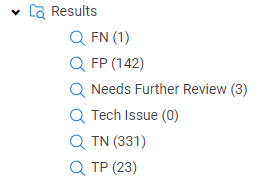

The results are in!

The first step is to folder up the True Positives, True Negatives, False Positives, and False Negatives. Remember, we have the TREC answer key to rely on so we don’t have to actually review the documents like you would in a real matter.

A quick guide on the confusion matrix:

True Positive = Instances where the AI classified the document as Relevant and the Answer Key also classified the document as Relevant.

True Negative = Instances where the AI classified the document as Not Relevant and the Answer Key also classified the document as Not Relevant.

False Positive = Instances where the AI classified the document as Relevant but the Answer Key classified the document as Not Relevant.

False Negative = Instances where the AI classified the document as Not Relevant but the Answer Key classified the document as Relevant.
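The four definitions above can be sketched as a small Python function. This is only an illustration: the label strings and the `confusion_bucket` helper are assumptions for this example, and the sample pairs are made up. Documents the AI did not call Relevant or Not Relevant (for instance Needs Further Review) are kept in their own folder rather than forced into the matrix.

```python
from collections import Counter

# Labels that count as a definite AI call (assumed spelling for this sketch).
DEFINITE_CALLS = ("Relevant", "Not Relevant")


def confusion_bucket(ai_label, key_label):
    """Map an (AI call, answer-key call) pair to its folder name."""
    if ai_label not in DEFINITE_CALLS:
        # e.g. "Needs Further Review" or "Technical Issue" stay in their own folder.
        return ai_label
    ai_relevant = ai_label == "Relevant"
    key_relevant = key_label == "Relevant"
    if ai_relevant and key_relevant:
        return "True Positive"
    if not ai_relevant and not key_relevant:
        return "True Negative"
    if ai_relevant:
        return "False Positive"
    return "False Negative"


# Made-up (AI call, answer-key call) pairs, purely for demonstration.
sample_calls = [
    ("Relevant", "Relevant"),
    ("Relevant", "Not Relevant"),
    ("Not Relevant", "Not Relevant"),
    ("Not Relevant", "Relevant"),
    ("Needs Further Review", "Not Relevant"),
]
folders = Counter(confusion_bucket(ai, key) for ai, key in sample_calls)
print(folders)
```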

We can use these to calculate Recall and Precision for our first run.

Recall = TP/(TP+FN)

Recall = 23/(23+5)

Recall = 82%

Precision = TP/(TP+FP)

Precision = 23/(23+194)

Precision = 11%
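For readers who would rather script the arithmetic, here is a minimal Python sketch of the same two formulas using the Round 1 counts above (TP = 23, FN = 5, FP = 194). The function names are just illustrative choices.

```python
def recall(tp, fn):
    """Share of truly Relevant documents that the AI found: TP / (TP + FN)."""
    if tp + fn == 0:
        raise ValueError("recall is undefined when there are no Relevant documents")
    return tp / (tp + fn)


def precision(tp, fp):
    """Share of AI-flagged documents that were truly Relevant: TP / (TP + FP)."""
    if tp + fp == 0:
        raise ValueError("precision is undefined when nothing was flagged Relevant")
    return tp / (tp + fp)


# Round 1 counts taken from the results above.
round_1_recall = recall(tp=23, fn=5)
round_1_precision = precision(tp=23, fp=194)

print(f"Recall = {round_1_recall:.0%}")        # Recall = 82%
print(f"Precision = {round_1_precision:.0%}")  # Precision = 11%
```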

The recall score is exactly where we need to be, but the precision definitely needs some work.

Now we want to take a quick look through the documents to see what we can improve.

Technical Issue

There is only 1 document that is a tech issue, and a quick review shows us that it exceeds the allowed file size limit.

While we could raise the file size limit and potentially review this document, we are going to leave it for now.

Needs Further Review

Next we look through the 6 docs that need further review.

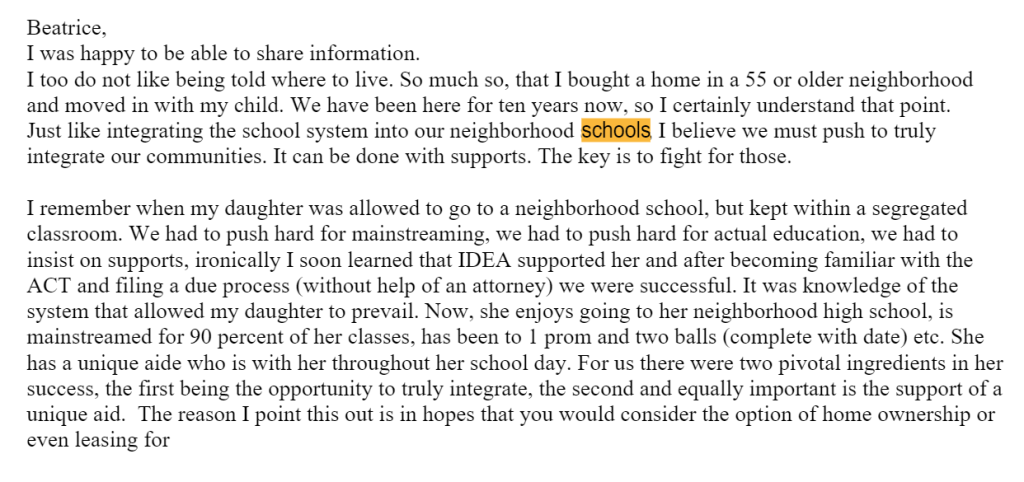

What we discover are documents without much content. For example:

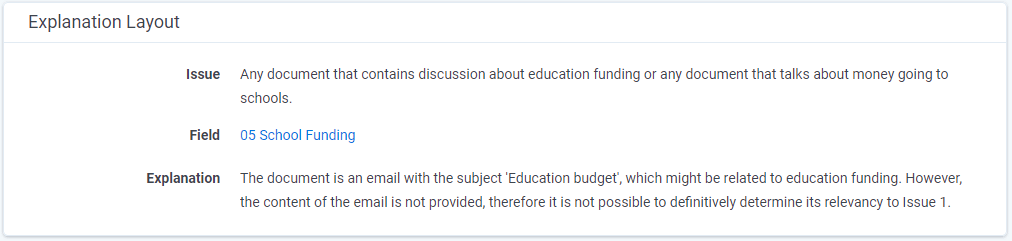

The explanations make it really easy to understand why this was classified as Needs Further Review.

Most of the time, a document gets classified as Needs Further Review because the system is uncertain how to classify it, much like how a human reviewer might come up and ask some questions about how to categorize a document.

Since there is a very low volume, and all of them are borderline cases, we are OK with these being marked as Needs Further Review, but we will try to clarify a little more in our next set of instructions.

False Negatives

False negatives are the worst. Not just because they sound bad, but because it means we are missing Relevant material. I’d rather have 5 false positives than 1 false negative.

The purpose here is to find out what we missed so we can update our instructions to capture them on the next round.

I added some highlighting to help us find key topics as we look through these 5 documents.

We immediately see that a few topics were missed:

- School Vouchers

- Scholarships

- Charter Schools

- Teacher Salaries

This is perfect and gives us what we need to improve our instructions on the next round.
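Once topics like these are known, a little scripted highlighting can help when skimming the next batch of documents. The Python sketch below is only an illustration: the regex patterns are an assumption about how each topic might be worded, the `>>` and `<<` markers are arbitrary, and the sample sentence is made up. A match is a prompt for a human to look closer, not a relevance call.

```python
import re

# Topics surfaced from the Round 1 false negatives. The patterns are
# assumptions about likely wording, not an exhaustive list.
TOPIC_PATTERNS = {
    "School Vouchers": r"vouchers?",
    "Scholarships": r"scholarships?",
    "Charter Schools": r"charter\s+schools?",
    "Teacher Salaries": r"teachers?'?\s+(?:salary|salaries|pay)",
}


def highlight_topics(text):
    """Wrap topic matches in >> << markers; return (marked_text, topics_found)."""
    found = []
    for topic, pattern in TOPIC_PATTERNS.items():
        regex = re.compile(rf"\b(?:{pattern})\b", re.IGNORECASE)
        text, count = regex.subn(lambda m: f">>{m.group(0)}<<", text)
        if count:
            found.append(topic)
    return text, found


# Made-up sentence, purely for demonstration.
sample_text = "Please expand the voucher program and raise teacher salaries."
marked, topics = highlight_topics(sample_text)
print(marked)
print(topics)
```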

False Positives

False positives aren’t quite so terrible. We didn’t miss anything, but we labeled documents as Relevant when they were not.

A quick review of these documents revealed a couple of things:

- Almost every document returned was related to school funding.

- The TREC answer key missed a lot of Relevant documents.

Here are a couple of the most obvious examples:

The great news is there is nothing here that is obviously Not Relevant. Of all 194 documents, the only ones that were truly Not Relevant were close calls that I have no problem including as Relevant. There were only a few that discussed schools, but didn’t have a direct reference to funding. We can update our instructions to fix that.

True Positives and True Negatives

I looked through a couple examples and didn’t see anything I disagreed with. On a live matter we would need to sample and review a larger set, but for this example we are going to skip over these.

Round 2 Instructions

Now we just have to take what we learned in Round 1 and update our instructions:

"All documents with any mention of education funding or money going to schools. Any discussion of School Vouchers should be considered Relevant. Any discussion of Charter Schools should be considered Relevant. Any discussion about Scholarships for school should be considered Relevant. Any discussion about teacher salaries or class size or money for textbooks should be considered Relevant. If it does not include a direct reference to funding, it should be considered Not Relevant."

"All documents that suggest increases in school funding."

"All documents that suggest decreases in school funding."

Then we take another random sample of 500 documents and we are off to the races!

Round 2 Results

The results are in!

The first step is to folder up the True Positives, True Negatives, False Positives, and False Negatives. Remember, we have the TREC answer key to rely on so we don’t have to actually review the documents like you would in a real matter.

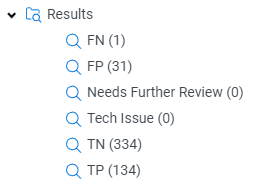

The results already look much better.

However, we know many of the classifications on the answer key are not accurate, so we are going to take a look before we try to calculate our metrics.

Technical Issue

There are none to review.

Needs Further Review

Next we look through the 3 docs that need further review.

All were generally on topic, but lacked any Relevant content. I changed these to Not Relevant.

False Negatives

False negatives are still the worst, but now we only have one.

In reviewing this long document, there was a very small section discussing schools, but nothing I could find related to funding. There was also a reference to vouchers, but not in the context of schools.

Let’s just say I would have marked this as Not Relevant, but for this exercise we’ll allow it so nobody accuses us of cheating.

Here is the (maybe) relevant portion:

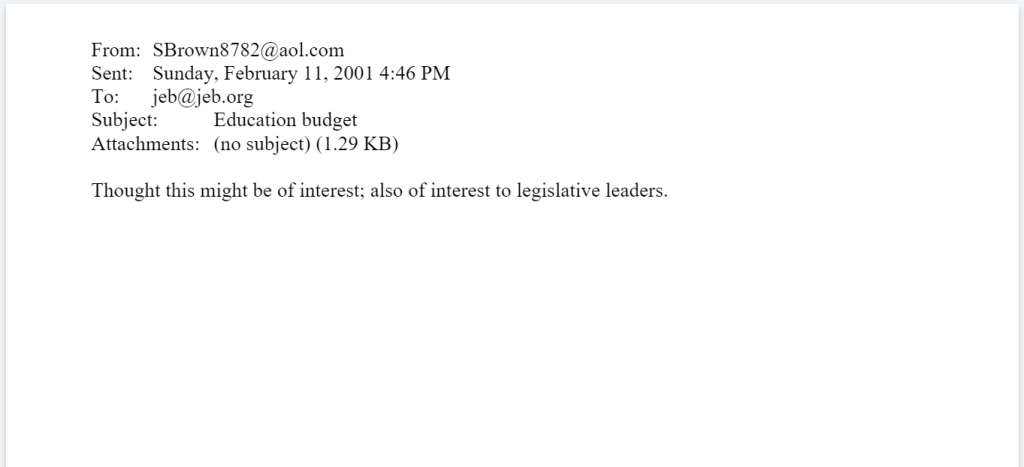

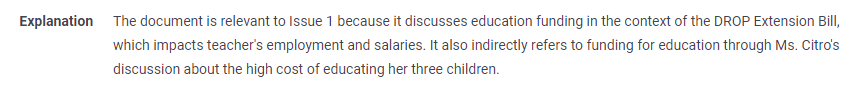

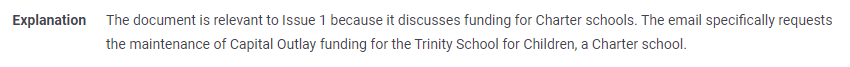

False Positives

Though there are far fewer False Positives than in the first pass, we still need to look through them to confirm they are accurate.

The results confirmed the TREC answer key had missed a lot of Relevant content.

This time we reviewed every single one.

I’ll save you the pain of having to look at these, but if you are truly interested, you can see some in the video.

The lifesaving part was having Explanations to show why each document was in fact relevant. A quick look at the explanation would point out the relevant portion.

True Positives and True Negatives

Again, we are going to shortcut this process a little bit. I looked through a couple examples and didn’t see anything I disagreed with. On a live matter we would need to sample and review a larger set.

Final Results

We can use these to calculate Recall and Precision for our second run.

Recall = TP/(TP+FN)

Recall = 134/(134+1)

Recall = 99%

Precision = TP/(TP+FP)

Precision = 134/(134+31)

Precision = 81%

Now we are at a point where we are comfortable with our instructions.

Conclusion

The only remaining step is to run these revised instructions across the rest of the review set. After the rest have been reviewed, we can use a random sample to calculate our final recall and precision numbers.

A quick disclaimer:

The precision and recall numbers we calculated give us enough information for this exercise to feel comfortable running the instructions across the entire set. However, we will still need to prepare a final random sample to calculate our official Recall and Precision metrics. The steps taken above are not sufficient to be defensible, but we will show that process in a future blog post.